Roll up, roll up, everyone’s a winner

I’ve managed the optimisation function at a number of companies, and I’ve realised there are a few truths. One is that every test is a winner.

In fact, across my track record, the hypothesis was validated in about two-thirds of all tests; that is, what we thought would win ended up winning. In the tests where the hypothesis wasn’t validated, we still learnt what not to do, and we fed those insights into future tests or into customer experiences on other digital touchpoints. So there you go, every test is a winner.

But first, let’s start with the basics.

So, what is Optimisation?

Optimisation of a website or digital channel is all about improving the customer experience so that customers are more likely to stay on your website and engage with the content. This increased engagement can translate directly into more satisfied customers and more sales. Optimisation also goes by a few other names, including A/B testing, multivariate testing and CRO (Conversion Rate Optimisation).

How it works: a proportion of customers (e.g. 50%) is randomly assigned experience A and the rest get experience B, and each group’s behaviour is tracked against a goal such as registering, buying, downloading or completing any other online task. The winner is the experience whose group completes that goal at the higher rate, once the difference reaches statistical significance. The threshold is usually a 95% confidence level, which means that if there were really no difference between A and B, a gap as large as the one observed would turn up by chance in fewer than 1 in 20 tests.
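To make the two mechanics above concrete, here is a minimal sketch in Python. It assumes a hypothetical `assign_variant` helper that splits users 50/50 by hashing their ID, and uses a standard two-proportion z-test to check whether the difference in conversion rates is significant at the 95% level. The function names, experiment name and numbers are illustrative assumptions, not taken from any particular testing tool; commercial platforms such as Adobe Target and Optimizely apply their own statistics.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str, split_a: float = 0.5) -> str:
    """Bucket a user into 'A' or 'B' deterministically (hypothetical helper).

    Hashing the experiment name with the user ID spreads users evenly
    and means a returning customer keeps seeing the same experience.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Use the first 32 bits of the hash as a number in [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    return "A" if bucket < split_a else "B"


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple:
    """Return (z, two-sided p-value) for B's conversion rate versus A's."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate, assuming A and B really convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No variation at all (0% or 100% conversion): nothing to test.
        return 0.0, 1.0
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: A converts 200/5,000 (4%), B converts 250/5,000 (5%).
    z, p = two_proportion_z_test(200, 5000, 250, 5000)
    verdict = "significant at 95%" if p < 0.05 else "not yet significant"
    print(f"z = {z:.2f}, p = {p:.3f} -> {verdict}")
```

With these made-up numbers the p-value comes out below 0.05, so B would be called the winner at 95% confidence. Hashing rather than a fresh random draw on every visit is one common design choice: it keeps each customer in the same group for the life of the test.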

Where do you start?

Finding the right use cases or test ideas is a great start; the next stage is to group them. These early tests will help you gain support and build momentum. You could set out to run a 2-3 month pilot with benchmarks and key objectives.

But don’t forget that people are critical to making this happen. They need a clear understanding and the right energy, not only to make it happen but also to tell the story of what’s happening.

Types of changes worth testing

What’s tested could include:

Content – videos, blogs, testimonials, articles

Design and layout – icons vs words, banner size and shape, changes to calls to action (CTAs)

Navigation and information architecture – labels, ordering

Features or capabilities – customer ratings on pages

Delaying processes – do you need to capture a customer’s information upfront if it’s causing drop-off?

Supporting new ideas or Minimum Viable Products (MVPs).

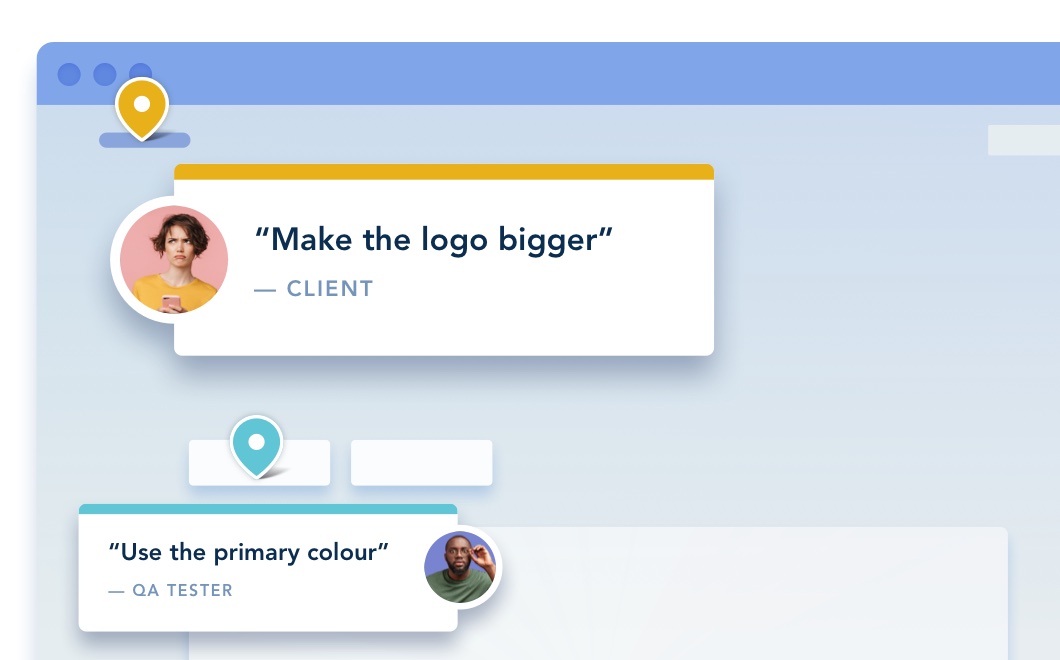

I’ve also used A/B testing to soothe heated political debates. In one case, there was a bitter divide between two opposing camps over a key homepage design element. By testing both experiences, we found a clear winner that settled the debate.

Effort vs reward

Setting up a program and running tests does take time. What’s exciting is seeing the results come in, and there’s no denying the learnings that follow. What’s more, it’s the customer who decides the best approach by voting with their clicks. You can’t argue with that.

It’s also very important to share and communicate test findings, because the rewards to the bottom line can be significant. Over a number of years, I’ve seen ROI exceed 400%.
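ROI is commonly calculated as net gain divided by cost. As a minimal sketch, assuming "gain" means the incremental value attributed to winning tests (definitions vary between businesses), the hypothetical figures below show what a 400% return implies: the program returns five times what it costs.

```python
def roi_percent(incremental_gain: float, program_cost: float) -> float:
    """ROI as a percentage: (gain - cost) / cost * 100."""
    if program_cost <= 0:
        raise ValueError("program_cost must be positive")
    return (incremental_gain - program_cost) / program_cost * 100


# Hypothetical figures: $100k spent on tooling and people, $500k of
# incremental value attributed to winning tests.
print(roi_percent(500_000, 100_000))  # 400.0
```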

I’ve also seen what happens to sites that run no tests and make no changes: a decline of 10-15% year on year. Testing encourages you to keep changing the website, and makes sure those changes are good ones.
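A year-on-year decline compounds. A quick sketch in Python, assuming the 10-15% drop is applied to the previous year’s level each year (the three-year horizon is purely illustrative), shows how far a static site could fall:

```python
def remaining_after(years: int, annual_decline: float) -> float:
    """Fraction of the starting level left after a compounding yearly decline."""
    return (1 - annual_decline) ** years


for rate in (0.10, 0.15):
    # Prints 72.9% for a 10% decline and 61.4% for a 15% decline.
    print(f"{rate:.0%} decline for 3 years leaves {remaining_after(3, rate):.1%}")
```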

What tools are out there?

There are many online solutions; in fact, possibly too many! Some are enterprise-level, while many others are cheap or even free and can be tried on smaller websites. If you’re not sure where to start, do a search; comparison sites can help narrow the options, and there are many of them.

I’ve used a number of A/B testing solutions, including Adobe Target and Optimizely. I rate both tools; the right solution will depend on your needs and on the technology stack you have in place or are planning.

It has never been more important

Improving how customers engage with businesses has never been more important. Australians spent over $36B on online retail in the 12 months to June 2020 (NAB Online Sales Index), a 23.1% increase on the previous year.

So maybe start small, with tests that not only improve the experience for customers but also add value for the business.

And don’t forget to roll up, roll up, as every test’s a winner.